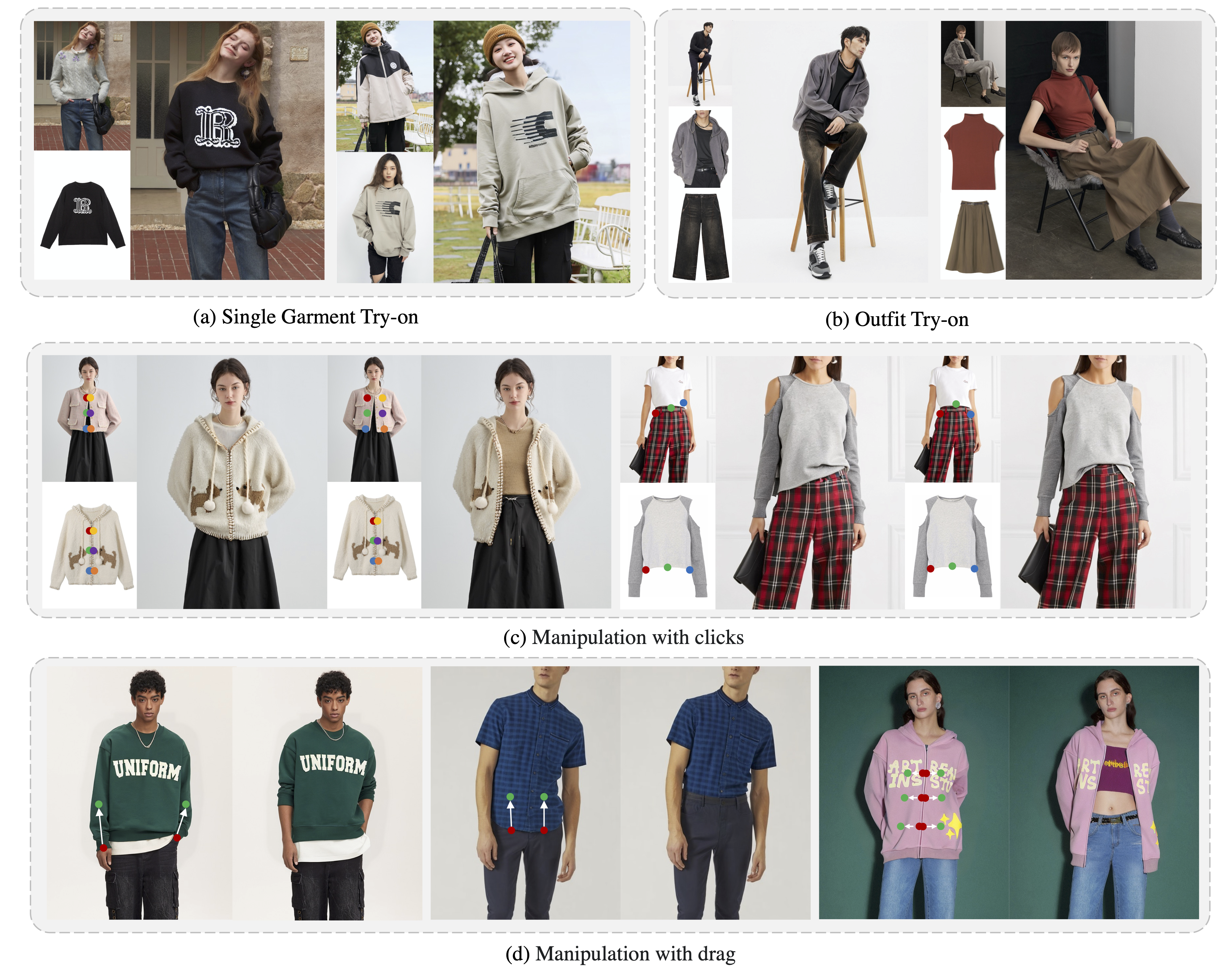

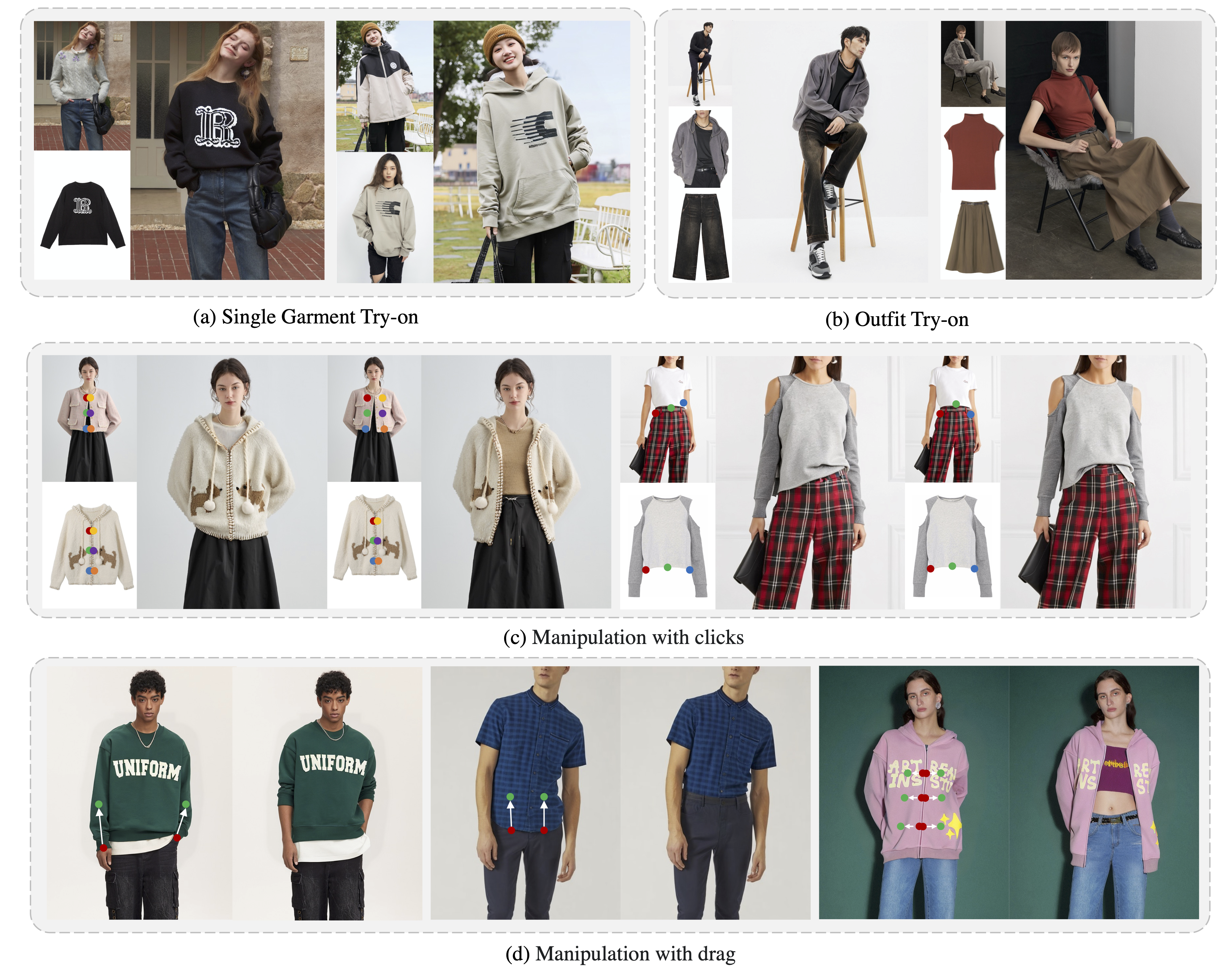

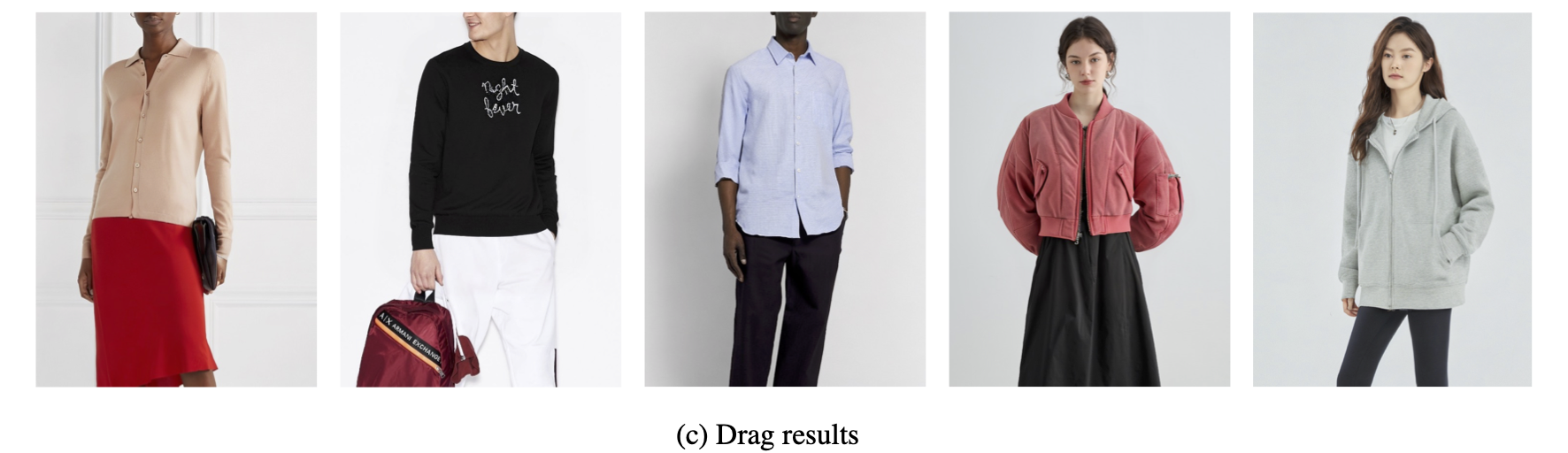

This paper introduces Wear-Any-Way, a novel framework for virtual try-on. Unlike previous methods, Wear-Any-Way is a customizable solution: besides generating high-fidelity results, it allows users to precisely manipulate the wearing style. To achieve this, we first build a strong pipeline for standard virtual try-on that supports single- and multi-garment try-on as well as model-to-model settings in complicated scenarios. To make it manipulable, we propose sparse correspondence alignment, which uses point-based control to guide the generation at specific locations. With this design, Wear-Any-Way achieves state-of-the-art performance in the standard setting and provides a novel form of interaction for customizing the wearing style. For instance, users can drag a sleeve to roll it up, drag a coat to open it, or use clicks to control the tuck style. Wear-Any-Way enables freer and more flexible expression of attire, with profound implications for the fashion industry.

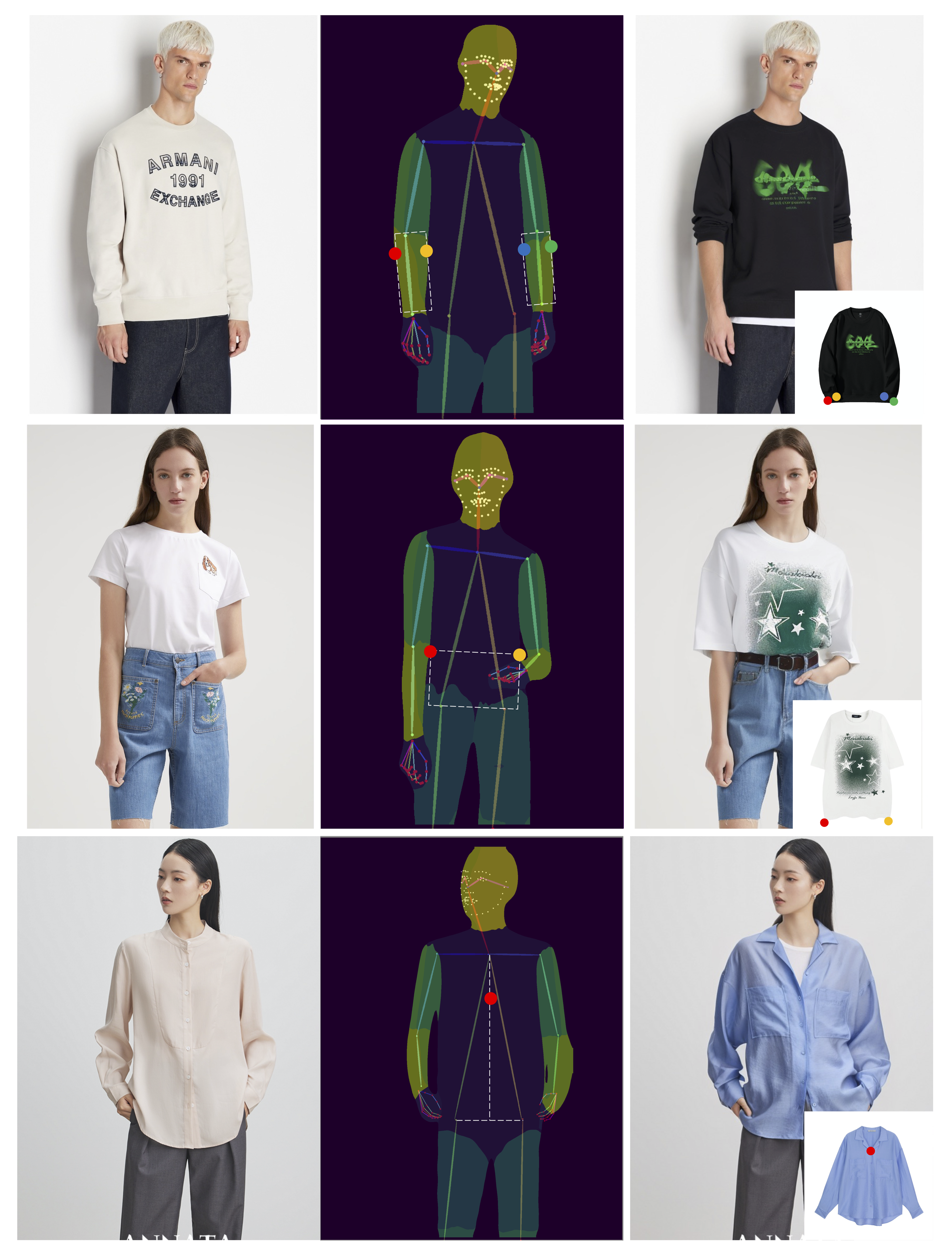

Wear-Any-Way allows users to place an arbitrary number of control points on the garment and person images to customize the generation, opening up diverse real-world applications.
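To make the idea of an arbitrary number of paired control points concrete, here is a minimal sketch of one possible input encoding, under our own assumptions rather than the released implementation: each garment/person point pair is drawn into two index maps so that matching points share the same ID. `PointPair` and `rasterize_pairs` are hypothetical names, and the encoding actually used by sparse correspondence alignment may differ.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[int, int]  # (x, y) pixel coordinates


@dataclass
class PointPair:
    garment: Point  # location on the garment image
    person: Point   # target location on the person image


def rasterize_pairs(pairs: List[PointPair],
                    garment_size: Tuple[int, int],
                    person_size: Tuple[int, int]):
    """Hypothetical encoding: pair k (1-based) is written as value k into two
    zero-initialised index maps, one per image, so matching points share an ID."""
    gw, gh = garment_size
    pw, ph = person_size
    garment_map = [[0] * gw for _ in range(gh)]
    person_map = [[0] * pw for _ in range(ph)]
    for k, pair in enumerate(pairs, start=1):
        gx, gy = pair.garment
        px, py = pair.person
        if not (0 <= gx < gw and 0 <= gy < gh and 0 <= px < pw and 0 <= py < ph):
            raise ValueError(f"pair {k} lies outside the image bounds")
        garment_map[gy][gx] = k  # row index is y, column index is x
        person_map[py][px] = k
    return garment_map, person_map
```

Because the number of pairs only changes how many IDs are written, the same two maps can carry one click or dozens; if two pairs land on the same pixel, the later one overwrites the earlier in this sketch.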

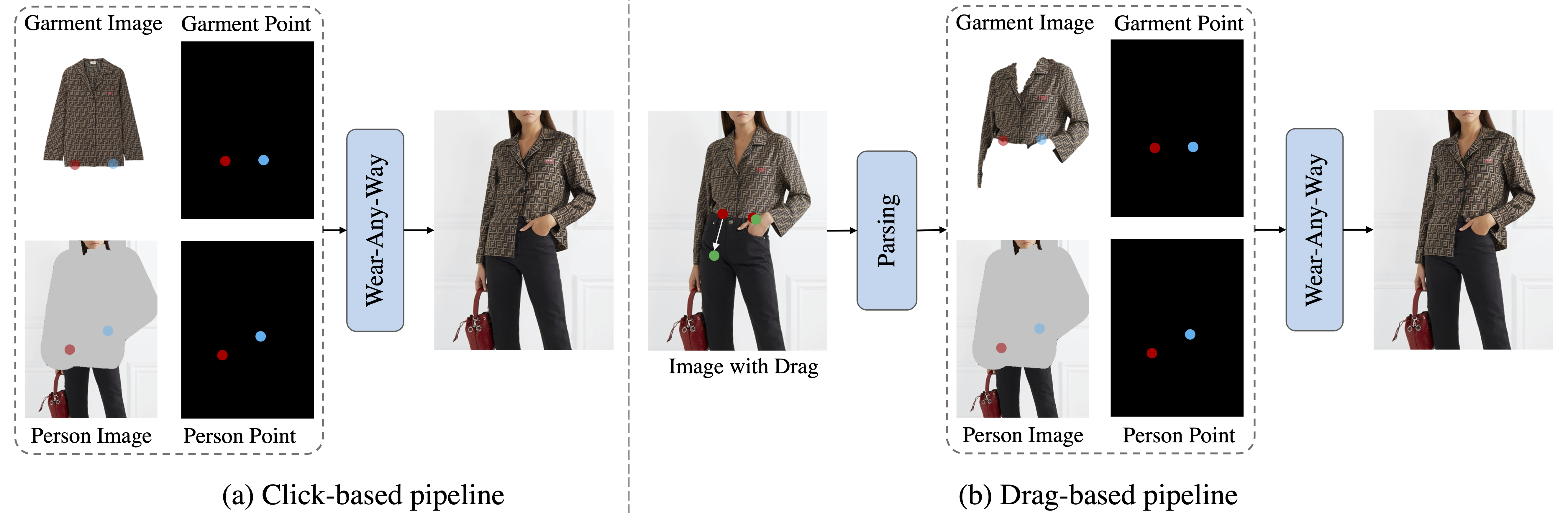

For click-based control, users provide a garment image, a person image, and pairs of corresponding points to customize the generation. For drag-based control, the start and end points of each drag are treated as the garment point and the person point, respectively, and the clothes parsed from the person image serve as the garment image. In this way, a drag can be converted into the click-based setting.
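The drag-to-click conversion described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: images are plain nested lists, the clothing-parsing mask is given, and `Drag` and `drag_to_click` are hypothetical names. The requirement that a drag start on a clothing pixel is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[int, int]     # (x, y) pixel coordinates
RGB = Tuple[int, int, int]
Image = List[List[RGB]]     # H x W grid of RGB pixels
Mask = List[List[bool]]     # H x W clothing-parsing mask


@dataclass
class Drag:
    start: Point  # where the user grabs the worn garment
    end: Point    # where that part of the garment should end up


def drag_to_click(person: Image, clothes_mask: Mask, drags: List[Drag],
                  background: RGB = (255, 255, 255)):
    """Convert drags on a person image into click-based inputs:
    (garment image, person image, [(garment_point, person_point), ...])."""
    # The parsed clothes become the garment image: keep clothing pixels,
    # paint everything else with a flat background colour.
    garment = [
        [pix if keep else background for pix, keep in zip(row, mask_row)]
        for row, mask_row in zip(person, clothes_mask)
    ]
    pairs = []
    for d in drags:
        x, y = d.start
        # Assumption: a drag must start on a pixel parsed as clothing.
        if not clothes_mask[y][x]:
            raise ValueError(f"drag start {d.start} is not on the garment")
        # The garment image shares the person image's coordinates, so the
        # start point is the garment point and the end point is the person point.
        pairs.append((d.start, d.end))
    return garment, person, pairs
```

Since the garment image is cut out of the person image in place, no coordinate transform is needed between the drag start point and the garment point, which is what lets the drag reuse the click-based pipeline unchanged.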

Control points can also be computed automatically by combining the skeleton map and the dense pose, so the wearing style can be controlled simply by setting different hyperparameters.
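The page does not specify which keypoints or hyperparameters are used, so the following is only a hypothetical sketch of how a skeleton and a dense-pose part map could be combined into a control point. Here a `ratio` hyperparameter places a point along a limb polyline (for example shoulder, elbow, wrist, as one might do to set how far a sleeve is rolled up), and the point is then snapped to the nearest pixel carrying a given dense-pose part label. The keypoint names, `part_id` values, and both function names are our own illustrative assumptions.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y)


def point_along_limb(keypoints: Dict[str, Point], chain: List[str],
                     ratio: float) -> Point:
    """Return the point at fraction `ratio` of the polyline through `chain`."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")
    pts = [keypoints[name] for name in chain]
    seg_lengths = [math.dist(a, b) for a, b in zip(pts, pts[1:])]
    target = ratio * sum(seg_lengths)  # arc length to travel from the first joint
    for (a, b), length in zip(zip(pts, pts[1:]), seg_lengths):
        if length > 0 and target <= length:
            t = target / length  # linear interpolation inside this segment
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        target -= length
    return pts[-1]  # ratio == 1 (up to rounding) or all segments degenerate


def snap_to_part(point: Point, part_map: List[List[int]],
                 part_id: int) -> Tuple[int, int]:
    """Snap `point` to the nearest pixel whose dense-pose part label is `part_id`."""
    best, best_d = None, math.inf
    for y, row in enumerate(part_map):
        for x, label in enumerate(row):
            if label == part_id:
                d = math.dist(point, (x, y))
                if d < best_d:
                    best, best_d = (x, y), d
    if best is None:
        raise ValueError(f"part {part_id} is not visible")
    return best
```

In this sketch the skeleton supplies a coarse, hyperparameter-controlled position, while the dense-pose snap keeps the final point on the intended body surface; changing `ratio` alone moves the control point and hence the intended wearing style.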

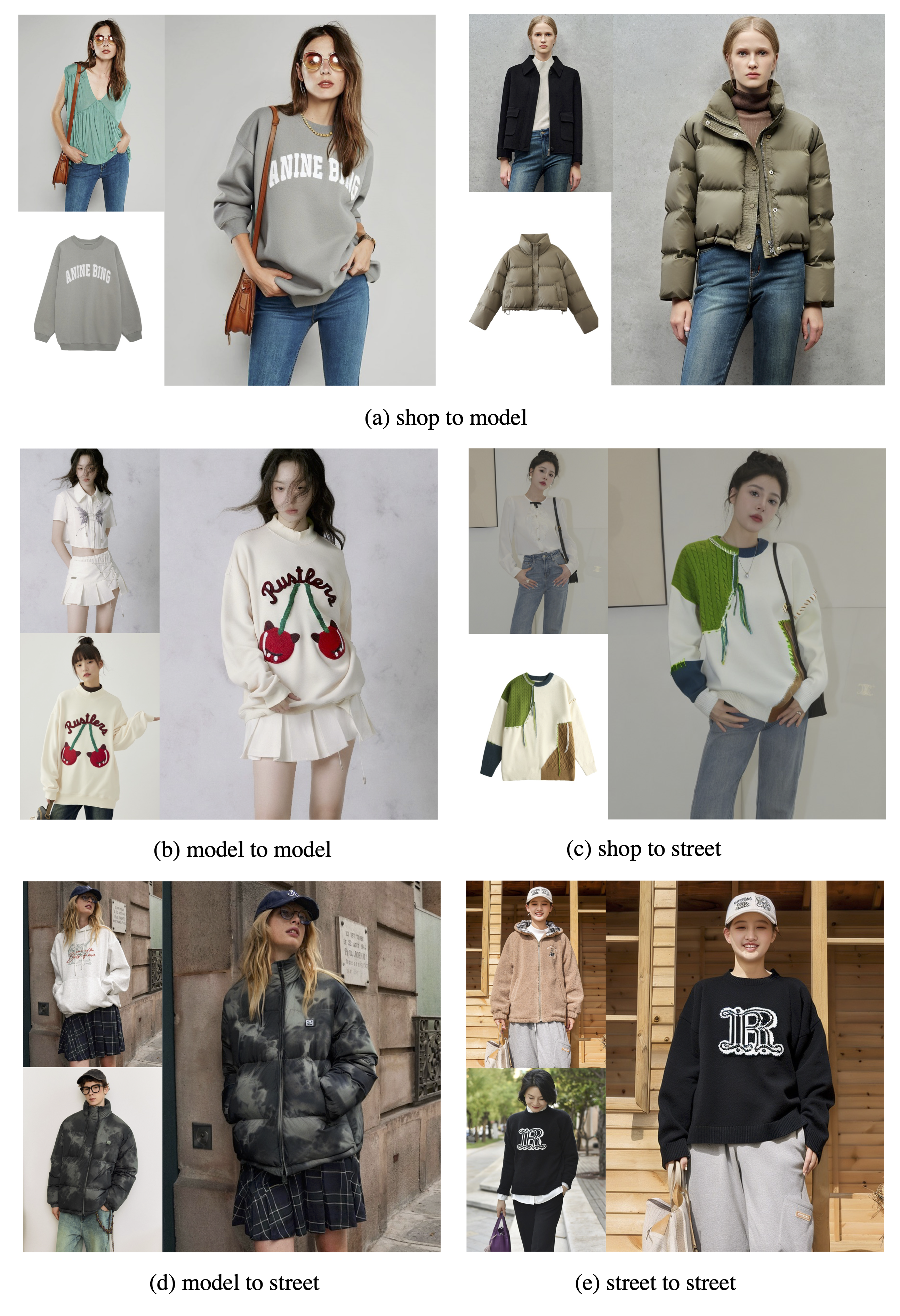

Wear-Any-Way supports various input types, including shop-to-model, model-to-model, shop-to-street, model-to-street, and street-to-street.

Wear-Any-Way lets users provide upper and lower garments simultaneously and generates the try-on result in a single pass.

@article{chen2024wear,
  title={Wear-any-way: Manipulable virtual try-on via sparse correspondence alignment},
  author={Chen, Mengting and Chen, Xi and Zhai, Zhonghua and Ju, Chen and Hong, Xuewen and Lan, Jinsong and Xiao, Shuai},
  journal={arXiv preprint arXiv:2403.12965},
  year={2024}
}